Non-Destructive PVC Namespace Move with Longhorn on K8s

K3s and Longhorn

I’ve long run a server (or servers) in my home. There have been many different tech stacks over the years, but I’ll save that saga for other posts.

Today, without even providing the backstory for my current HomeLab adventures with K8s (Kubernetes), I’m going to dive into the specifics of a storage-related problem and my quick-and-dirty solution.

However, I suppose it’s at least important to know I’m running K3s, a lightweight Kubernetes distribution, and for my magical storage solution on Kubernetes, I’m running Longhorn.

Problem

Though I’ve used Kubernetes in the past, running one’s own cluster is different. I’m responsible for all my own organization, so it’s no surprise I did something I later wanted to change.

I initially installed most of my apps to the default namespace. It didn’t matter if I installed via manual YAML or Helm chart, it was just … the default.

After a while I realized I wanted to organize things a bit more. Home Automation apps should be in a dedicated namespace, and a few core services should be in one also.

The problem here is, I’d created PersistentVolumeClaims (PVC) in the default namespace, each bound to a PersistentVolume (PV), since the Pods attaching to a PVC must be in the same namespace as that PVC.

Moving these seemed daunting. For my first attempt, I actually created a new PVC/PV in the new namespace, then used a Job Pod to rsync data between the two. That was far too much effort, so I wanted a better solution.

I found a few different suggestions online regarding moving a PV to a different namespace, but I wanted it to work “magically” without having to change my current YAML PVC definitions to use an existing PV or PVC. Plus I wanted my Longhorn-managed volumes to continue working as is.

Solution

Here’s what I did. As an example, I’ll move my Gitea app from the default namespace to core.

Start State

For starters, I have this YAML to define my PVC:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gitea
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: longhorn
  resources:
    requests:
      storage: 2Gi

Note there is no namespace: field in the metadata. Since I was lazy and didn’t specify kubectl apply --namespace core on the CLI when I created this, it was of course created in the default namespace.
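To spot every claim that landed in default this way, something like the sketch below can help. The function name list_pvc_namespaces is a made-up helper, not part of kubectl; the flags it wraps (--all-namespaces and -o custom-columns) are standard kubectl options.

```shell
# list_pvc_namespaces (hypothetical helper)
# Prints every PVC in the cluster with its namespace and bound volume,
# so claims that ended up in "default" by accident are easy to see.
list_pvc_namespaces() {
  kubectl get pvc --all-namespaces \
    -o custom-columns='NAMESPACE:.metadata.namespace,NAME:.metadata.name,VOLUME:.spec.volumeName'
}

# Usage:
#   list_pvc_namespaces
```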

So, inspecting my environment we see:

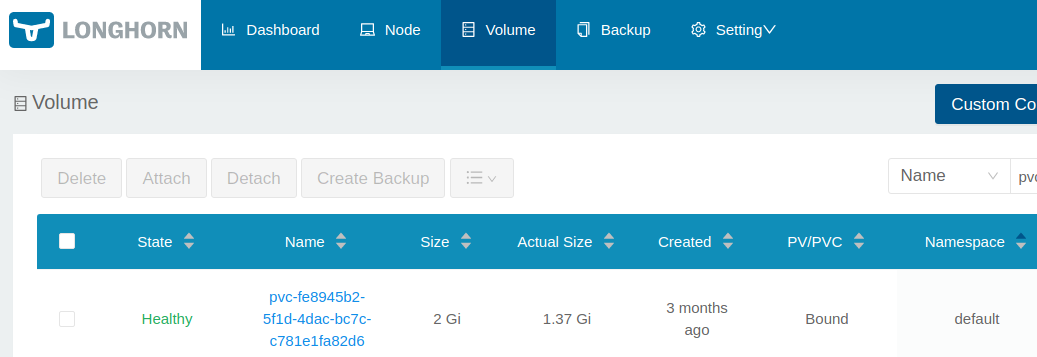

$ kubectl get pvc
NAME    STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
gitea   Bound    pvc-fe8945b2-5f1d-4dac-bc7c-c781e1fa82d6   2Gi        RWO            longhorn       103d

$ kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM           STORAGECLASS   REASON   AGE
pvc-fe8945b2-5f1d-4dac-bc7c-c781e1fa82d6   2Gi        RWO            Delete           Bound    default/gitea   longhorn                102d
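Rather than copying the generated PV name out of that table by hand, it can be read from the claim itself. This is a minimal sketch; pv_for_pvc is a hypothetical helper name, and it assumes the claim is already bound so that .spec.volumeName is populated.

```shell
# pv_for_pvc NAMESPACE PVC_NAME (hypothetical helper)
# Prints the name of the PV that the given claim is bound to.
pv_for_pvc() {
  kubectl get pvc "$2" --namespace "$1" -o jsonpath='{.spec.volumeName}'
}

# Usage:
#   export PV="$(pv_for_pvc default gitea)"
```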

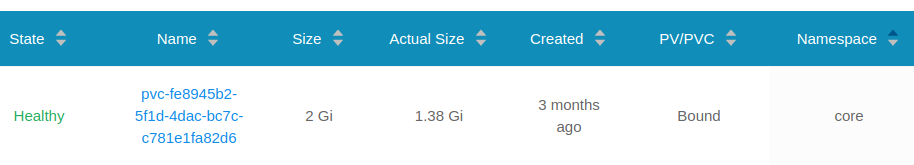

And we can also look at this via the Longhorn dashboard UI:

Make Some Changes

Now it’s time to make the changes.

Safety First

First, I’m going to use Longhorn’s UI to trigger a fresh backup of the volume. This needs to be done while the Pods are still attached.

Second, modify the PV above to have Retain as its Reclaim Policy. With Retain, deleting the PVC leaves the PV and the underlying volume in place, whereas the default Delete policy would remove them along with the claim.

$ export PV=pvc-fe8945b2-5f1d-4dac-bc7c-c781e1fa82d6
$ kubectl patch pv "$PV" -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'

# confirm it's no longer set to `Delete`
$ kubectl get pv "$PV"
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM           STORAGECLASS   REASON   AGE
pvc-fe8945b2-5f1d-4dac-bc7c-c781e1fa82d6   2Gi        RWO            Retain           Bound    default/gitea   longhorn                103d
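Since everything that follows depends on the policy really being Retain, the patch and the check can be folded into one step that stops on a mismatch. This is a sketch only: retain_pv is a hypothetical helper name, and it reads the policy back with kubectl's jsonpath output instead of eyeballing the table.

```shell
# retain_pv PV_NAME (hypothetical helper)
# Sets the reclaim policy to Retain, reads it back, and returns non-zero
# if the cluster reports anything other than Retain.
retain_pv() {
  kubectl patch pv "$1" \
    -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}' >/dev/null || return 1
  policy="$(kubectl get pv "$1" -o jsonpath='{.spec.persistentVolumeReclaimPolicy}')"
  if [ "$policy" != "Retain" ]; then
    echo "refusing to continue: $1 has reclaim policy '$policy'" >&2
    return 1
  fi
  echo "$1 is set to Retain"
}

# Usage:
#   retain_pv "$PV" && kubectl delete -f resource-defs-gitea.yaml
```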

Out with the Old

Time to remove the old resources.

Since I’ve manually defined all my Gitea resources in a single YAML file, I can just do:

$ kubectl delete -f resource-defs-gitea.yaml
persistentvolumeclaim "gitea" deleted
deployment.apps "gitea" deleted
service "gitea-svc" deleted

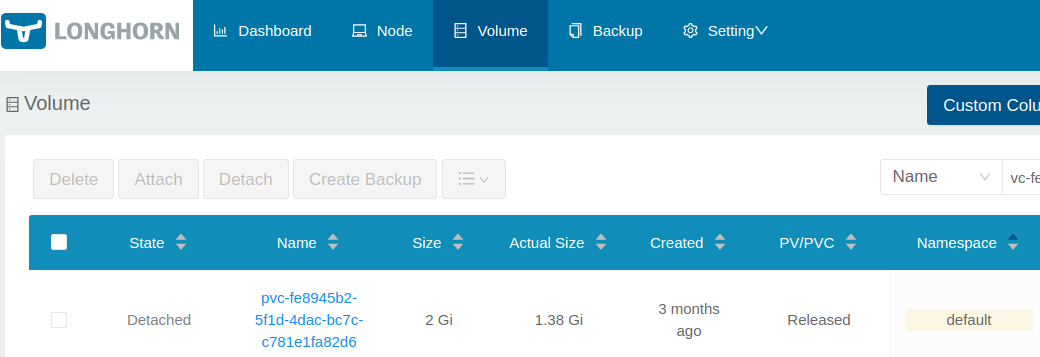

But we can see our PV is NOT deleted, just the PVC.

$ kubectl get pv $PV

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-fe8945b2-5f1d-4dac-bc7c-c781e1fa82d6 2Gi RWO Retain Released default/gitea longhorn 103d

Also this can be seen in the Longhorn UI:
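The same check can be scripted by reading the PV's phase directly. A minimal sketch, with pv_phase and assert_pv_survived as hypothetical helper names; after the delete above the phase should come back as Released.

```shell
# pv_phase PV_NAME (hypothetical helper)
# Prints the PV's lifecycle phase, e.g. Bound, Released or Available.
pv_phase() {
  kubectl get pv "$1" -o jsonpath='{.status.phase}'
}

# assert_pv_survived PV_NAME (hypothetical helper)
# Succeeds if the PV still exists in a usable phase, fails otherwise.
assert_pv_survived() {
  phase="$(pv_phase "$1")" || { echo "PV $1 not found" >&2; return 1; }
  case "$phase" in
    Released|Available|Bound)
      echo "PV $1 still exists (phase: $phase)" ;;
    *)
      echo "PV $1 is in unexpected phase '$phase'" >&2
      return 1 ;;
  esac
}

# Usage:
#   assert_pv_survived "$PV"
```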

In with the New

It’s time to create the new namespace:

$ kubectl create namespace core
namespace/core created
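If the namespace might already exist (say, when moving a second app into core), kubectl create fails with an AlreadyExists error. One common workaround is to render the namespace with a client-side dry run and pipe it to kubectl apply. A sketch, with ensure_namespace as a hypothetical helper name:

```shell
# ensure_namespace NAME (hypothetical helper)
# Creates the namespace if it is missing and is a no-op if it already exists.
ensure_namespace() {
  kubectl create namespace "$1" --dry-run=client -o yaml | kubectl apply -f -
}

# Usage:
#   ensure_namespace core
```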

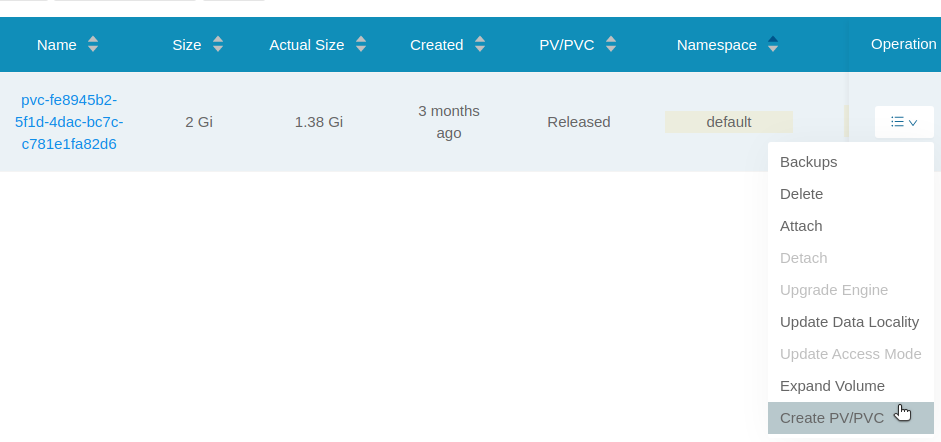

Without further ado, the magic. In the Longhorn UI, we’ll recreate the PVC.

- While looking at the list of volumes, for our desired volume, select

Create PV/PVCfrom theOperationsmenu dropdown

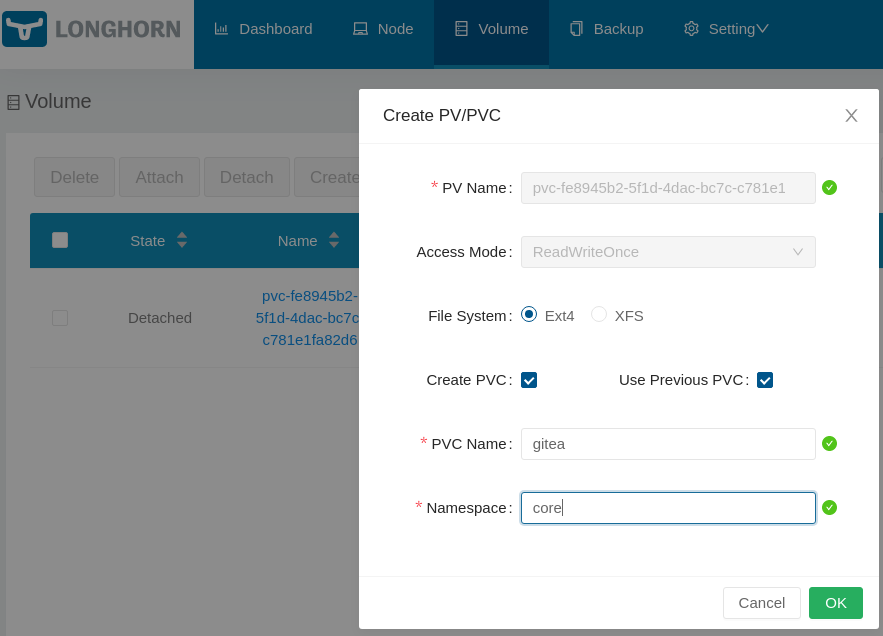

- In the following pop-up menu:

- check

Create PVC - check

Use Previous PVC - keep the original PVC name, in my case

gitea - change the Namespace from

defaulttocore(our new desired NS).

- Click

OK
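Once the dialog is confirmed, the PV should record the new claim. One way to check from the CLI is to read the PV's claimRef, which is the same information kubectl shows in the CLAIM column. A sketch under that assumption; claim_of_pv and expect_claim are hypothetical helper names.

```shell
# claim_of_pv PV_NAME (hypothetical helper)
# Prints "<namespace>/<claim name>" as recorded in the PV's claimRef.
claim_of_pv() {
  kubectl get pv "$1" -o jsonpath='{.spec.claimRef.namespace}/{.spec.claimRef.name}'
}

# expect_claim PV_NAME NAMESPACE/CLAIM (hypothetical helper)
# Succeeds only if the PV is claimed by exactly the given namespace/claim.
expect_claim() {
  actual="$(claim_of_pv "$1")" || return 1
  if [ "$actual" = "$2" ]; then
    echo "$1 is claimed by $2"
  else
    echo "$1 is claimed by '$actual', expected '$2'" >&2
    return 1
  fi
}

# Usage:
#   expect_claim "$PV" core/gitea
```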

Optionally update the YAML definitions to include a namespace. You could just use the namespace flag on the CLI, but I’ll be a bit more self-protective and update my YAML, showing just the PVC here. Ensure the updated YAML has the same namespace for all resources (Deployment/StatefulSet/etc/PVC).

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gitea
  namespace: core
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: longhorn
  resources:
    requests:
      storage: 2Gi

Now, we recreate our app resources with the YAML.

$ kubectl apply --namespace core -f resource-defs-gitea.yaml
Warning: resource persistentvolumeclaims/gitea is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
persistentvolumeclaim/gitea configured
deployment.apps/gitea created
service/gitea-svc created

Note: we expect the warning above because the PVC already exists (Longhorn created it for us), so kubectl apply ends up patching a resource it didn’t create instead of creating a new one.
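To confirm the app actually came back up on the moved volume, waiting on the Deployment's rollout is one option. A minimal sketch: wait_for_app is a hypothetical helper name and the 120s default timeout is an arbitrary choice; kubectl rollout status and its --timeout flag are standard.

```shell
# wait_for_app NAMESPACE DEPLOYMENT [TIMEOUT] (hypothetical helper)
# Blocks until the Deployment's rollout completes or the timeout expires.
wait_for_app() {
  kubectl rollout status "deployment/$2" --namespace "$1" --timeout="${3:-120s}"
}

# Usage:
#   wait_for_app core gitea
```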

End State

Now Gitea is running happily again on its volume, with the PVC moved from the default namespace to core. No data loss and minimal changes to my existing resource YAML.